Things are moving so fast we need to specify the month, maybe even the week. Here are the things you should know (definitely not written by AI):

Rigging the ATM

Up until recently the most effective way to set fire to money was to have a raging drug habit. Now, we have AI.

You’ve probably seen the articles about how related-party transactions and ‘non-binding, speculative, non-committal letters of intent’ were driving the construction of massive data centres based on non-existent demand.

This all looks likely to stop soon. OpenAI has already pulled out of a $100b investment, Oracle’s investment in data centres is shaky, and OpenAI themselves are passing the hat around in the Middle East, looking for $50-100b before the end of March. The general consensus is that they will run out of money before their IPO at the end of 2026, but I haven’t seen any speculation about what happens if they don’t secure the funds by March…

Open Source

OpenAI’s big bet was to become ‘too big to fail’, i.e. to soak up all the demand for AI and spend big on each successive model so that smaller companies could never compete. It didn’t really matter if they set fire to all that cash, because they could win a monopoly on the market by producing better models than everyone else. OK, I had forgotten the term ‘Blitzscaling’, which now makes it into the article thanks to my very smart friend Roger Hanney. That link is a great read.

But you can’t create a monopoly if some of the demand for AI can be satisfied by a model that is ‘good enough’ and effectively free. Enter open source. What’s actually happened is that DeepSeek, Meta (Llama), Alibaba (Qwen), Moonshot AI (Kimi) and others now offer really strong alternatives.

Microsoft

Servicemax are currently getting several emails a day from Microsoft pushing their AI products. They will probably be great in the future, but Microsoft seriously overestimated the demand for the minimal utility they currently provide. Is the true cost of Copilot more than the cost of M365 Business Premium?

Maybe, but at that price most people will pass.

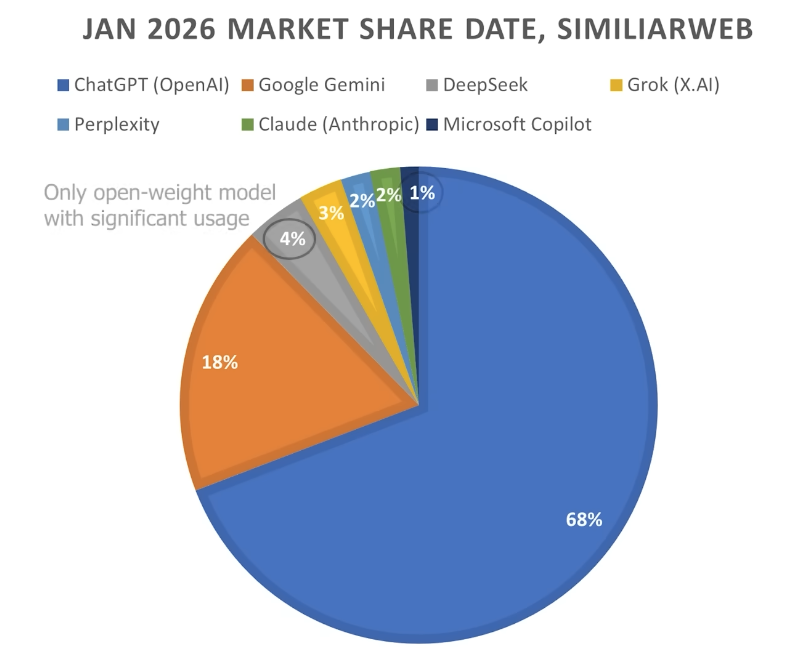

And here’s why Microsoft are freaking out: Copilot currently has 1% market share.

SkyNet Became Self-Aware Last Week

In case you missed it, everyone is currently talking about Clawdbot, then MoltBot, now OpenClaw (yes, that’s its third name in a week…). It’s a collection of code that orchestrates agents that can actually do stuff for you. Of course it’s a bit rough and immediately did some epically dumb things, but it looks like it could be useful one day. For some people that day is every day: it surpassed 100,000 GitHub stars within 3 days of becoming popular.

And it has a social network that humans cannot join. Humans can watch the conversation, but only agents can post, so you can watch the agents plot (or not) in real time.

If you think I’m being melodramatic by calling it SkyNet, please read this (fictional) story and realise that it already has this capability…

This isn’t the first, or even the tenth, time that AI agents have been allowed to escape the sandbox. I used littlebird.ai for a bit, and of course Microsoft, Google et al. also know that AI tools are a bit useless if you have to copy and paste backwards and forwards to get anything done.

What is kind of unique in this case is that so many people gave this tool so much power, in the hope that it would do something good. I’m personally invested because my wife’s name is Sarah Connor, but haven’t these people watched ANY Terminator movies?

Did the Market Fracture or Explode?

We are starting to realise that using an energetically expensive model to turn on your living room lights is suboptimal. So we are entering the era of specialisation: not just models that can code, but models built to do one narrow job well. We’ve already been through the future shocks of Agents, Mixture of Experts, MCP servers, and ever longer context windows with deeper thinking.

Where Are We on Maturity?

Look, fart jokes are always funny, so I’m not the person to ask. But if you think about the usability of language models (not just the large ones), we might propose a scale like this:

1. Gives answers to questions

2. Creates articles and documents

3. Can take actions on your behalf

4. Can take actions on your behalf safely

5. Takes multiple actions to optimise your life

6. Becomes an indispensable team member

7. Turns you into a battery from The Matrix

We are somewhere between steps 3 and 4. It’s an exciting time, and just a little bit frightening. In our world of IT and tech support, we’re super excited about the possibilities, but also aware of the practicalities. No one wants to talk to a machine that isn’t actually helping; we’re perhaps a little more amenable to one that helps a lot, but the value needs to be super clear.

If you want a better look at the evolutionary stages of AI (sadly, no Matrix jokes), please read this article.

What’s Next?

These predictions will surprise no one, but here goes:

AI will become cheaper and more expensive, and less useful and more useful.

What?

Specialised, cut-down models are already super cheap to run, and we expect this trend to continue.

Commercial AI companies will eventually have to charge commercial prices for their products, so those products will become more expensive. Specialised models, meanwhile, will be less useful outside their niche: a model that knows your home network and specialises in recognising drunk speech to turn things on and off isn’t going to be useful for producing cat videos.

But the Next Big Thing is going to be seeing how useful the ‘thinking sand’ can be.